A research paper describes a new system that theoretically enables you to build a relatively accurate 3D model of a human face from a still image.

The paper, by Shunsuke Saito, Lingyu Wei, Liwen Hu, Koki Nagano and Hao Li of the University of Southern California and the USC Institute for Creative Technologies, describes a method built on deep neural networks. The authors explain:

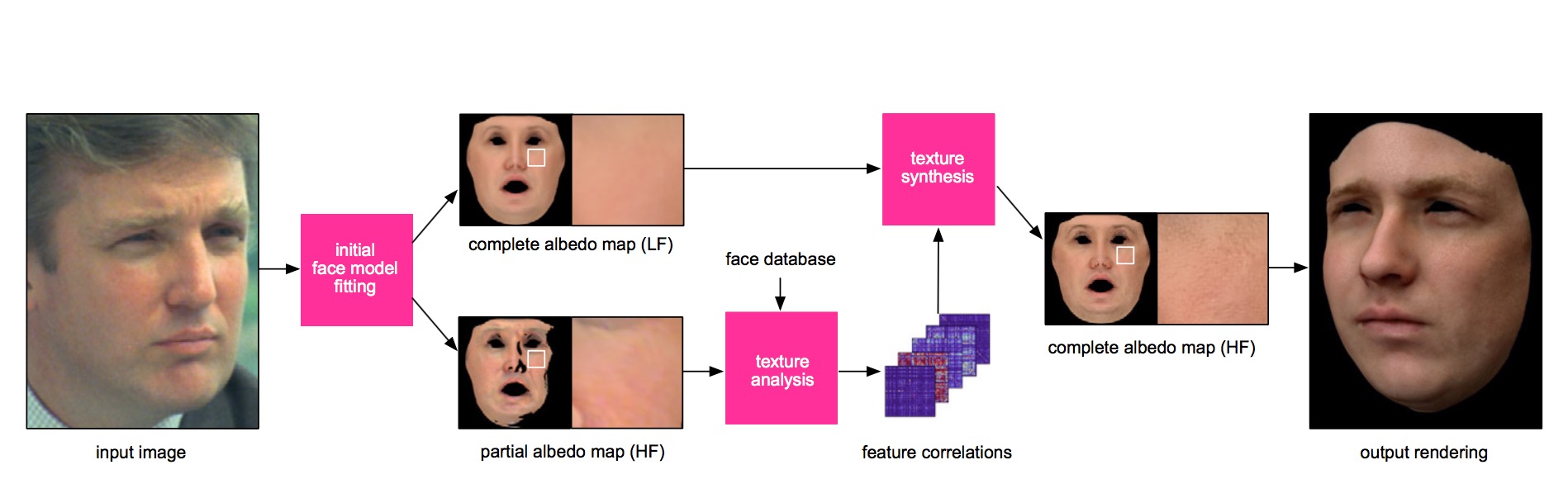

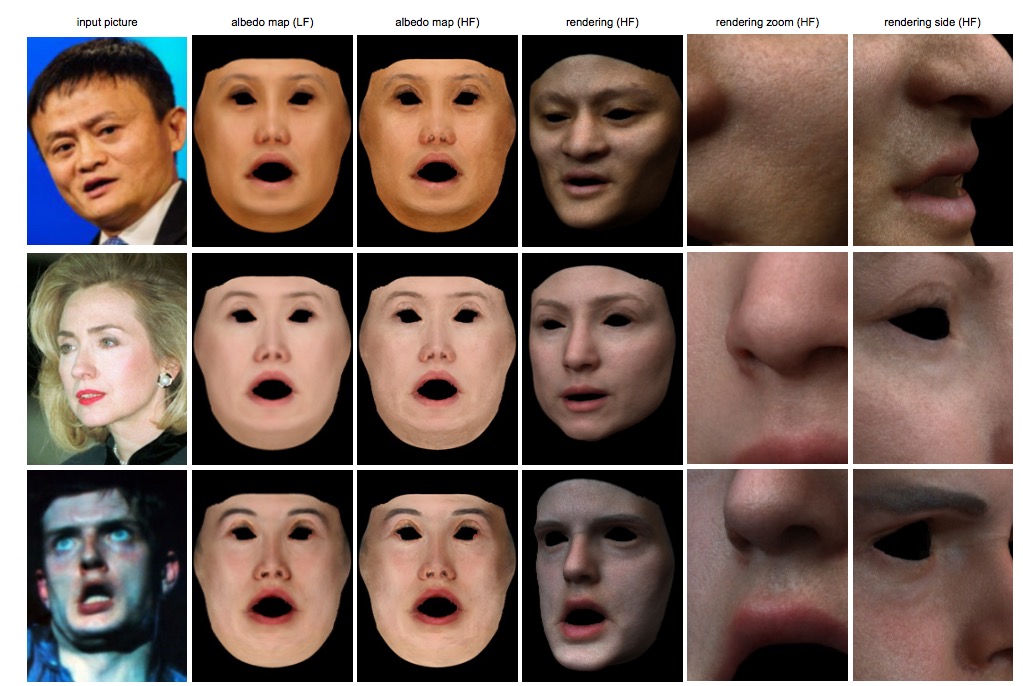

> We present a data-driven inference method that can synthesize a photorealistic texture map of a complete 3D face model given a partial 2D view of a person in the wild. After an initial estimation of shape and low-frequency albedo, we compute a high-frequency partial texture map, without the shading component, of the visible face area. To extract the fine appearance details from this incomplete input, we introduce a multi-scale detail analysis technique based on mid-layer feature correlations extracted from a deep convolutional neural network.
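The abstract separates a face's appearance into a low-frequency part (the overall albedo) and a high-frequency part (fine detail). To make that distinction concrete, here is a minimal sketch, assuming a grayscale image stored as a list of rows and a simple box blur as the low-pass filter. The function names `box_blur` and `split_frequencies` and the blur radius are hypothetical illustrations, not the paper's actual decomposition.

```python
# Illustrative sketch only: split a grayscale image (list of rows of floats)
# into a low-frequency base and a high-frequency detail layer.
# The box blur and its radius are hypothetical choices, not the paper's method.

def box_blur(image, radius=1):
    """Average each pixel over a (2r+1)x(2r+1) window, ignoring
    neighbours that fall outside the image."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += image[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out

def split_frequencies(image, radius=1):
    """Return (low, high) such that low + high == image at every pixel."""
    low = box_blur(image, radius)
    high = [[image[y][x] - low[y][x] for x in range(len(image[0]))]
            for y in range(len(image))]
    return low, high

# A single bright pixel: the blur spreads it out, the residual keeps the spike.
img = [[0.0, 0.0, 0.0],
       [0.0, 9.0, 0.0],
       [0.0, 0.0, 0.0]]
low, high = split_frequencies(img)
print(low[1][1], high[1][1])  # prints 1.0 8.0
```

The high-frequency layer is where skin pores, wrinkles and similar detail live, which is why it is the harder part to recover from a blurry or partial photo.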

Here is a video showing what the experimental system produces from ordinary 2D images, and it's impressive:

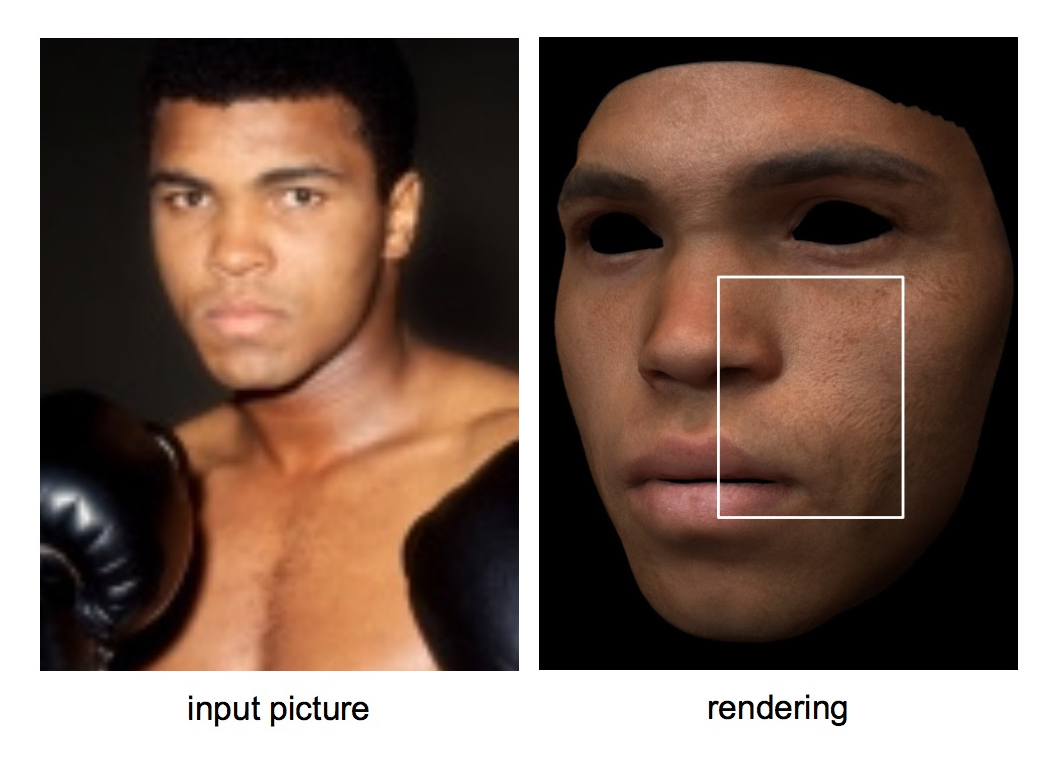

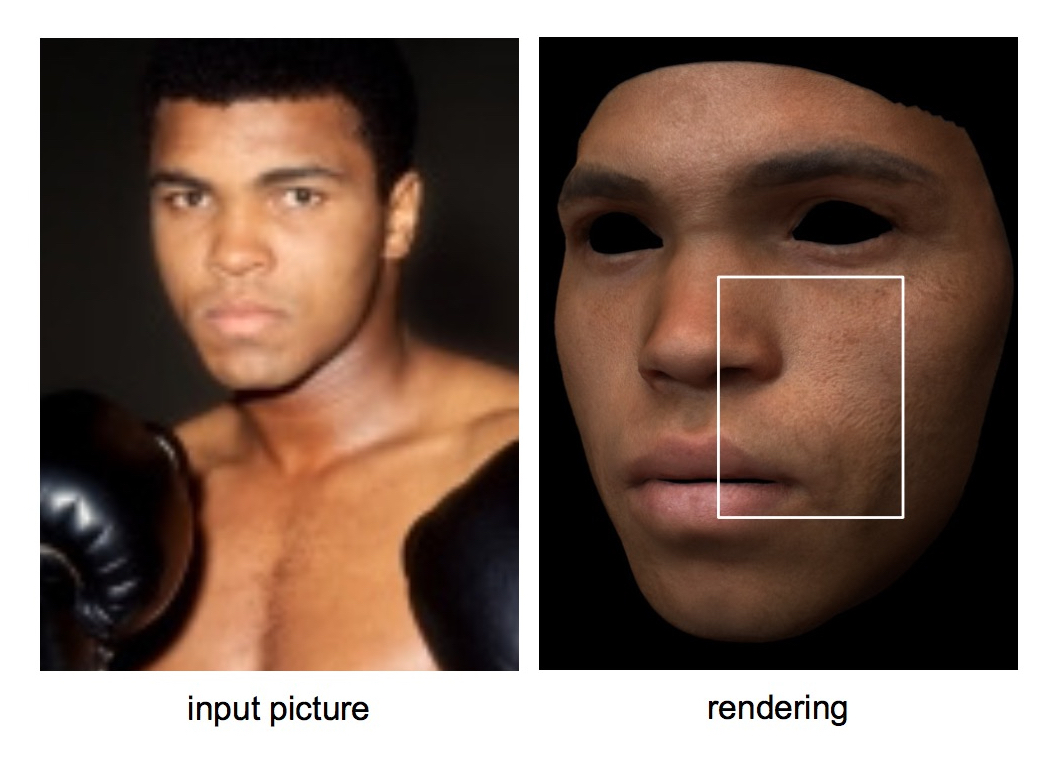

Successfully capturing a human face is a tricky business, even with dedicated 3D hardware and software: you need appropriate lighting from all sides and a lot of patience. This project appears to sidestep those requirements by using a single image as input, and that image, as you can see in the video and these samples, can be pretty terrible in terms of lighting and detail.

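How do they do this? For the full details you'll have to read the paper, but in broad strokes the system first estimates the face's shape and low-frequency albedo, then extracts a high-frequency, shading-free texture map of the visible part of the face, and finally analyzes correlations between mid-layer features of a deep convolutional neural network at multiple scales to fill in the fine detail of a complete face texture.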
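The "mid-layer feature correlations" named in the abstract can be illustrated with a Gram matrix, the formulation popularized by neural texture synthesis and style transfer. The sketch below is an assumption about what such correlations look like, not the authors' code: it takes each CNN layer's output as a list of channels (each a flat list of activations), and the names `gram_matrix`, `correlation_distance` and `multi_scale_distance`, the normalization, and the per-layer weights are all hypothetical.

```python
# Illustrative sketch only: "feature correlations" as Gram matrices.
# The normalization and layer weighting are assumptions, not the paper's.

def gram_matrix(features):
    """features: C channels, each a flat list of N = H*W activations.
    Returns C x C matrix G with G[i][j] = (1/N) * sum_k F_i[k] * F_j[k]."""
    c, n = len(features), len(features[0])
    return [[sum(a * b for a, b in zip(features[i], features[j])) / n
             for j in range(c)]
            for i in range(c)]

def correlation_distance(feats_a, feats_b):
    """Sum of squared differences between two Gram matrices. It is small
    when two feature maps share texture statistics, wherever they occur."""
    ga, gb = gram_matrix(feats_a), gram_matrix(feats_b)
    return sum((x - y) ** 2
               for row_a, row_b in zip(ga, gb)
               for x, y in zip(row_a, row_b))

def multi_scale_distance(layers_a, layers_b, weights=None):
    """Weighted sum of correlation distances over several network layers
    (hypothetical equal weights by default)."""
    weights = weights or [1.0] * len(layers_a)
    return sum(w * correlation_distance(a, b)
               for w, a, b in zip(weights, layers_a, layers_b))

a = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]]
b = [[4.0, 3.0, 2.0, 1.0], [1.0, 0.0, 1.0, 0.0]]  # same values, positions reversed
print(correlation_distance(a, b))  # prints 0.0
```

The usage line shows the key property: rearranging activations spatially leaves the correlations unchanged. Statistics like these describe *what kind* of detail is present rather than *where*, which is plausibly why they help when only part of the face is visible.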

It's incredible to see how well this system works, considering the rotten quality of some of the input images, which are blurry, poorly lit and taken at awkward angles. In a sense, the system is simulating what your brain does when it sees similarly bad inputs: it organizes them into the concept of a face.

While the system is ultimately intended for quickly producing 3D avatars for digital use, I suspect it could easily be adapted to produce reasonable-quality 3D models for printing as well.

Since the entire system is software that processes a single image, one could easily imagine a module that uses the webcam on your laptop to rapidly produce a usable 3D model of your face. As far as I know, that's something no one has managed to do yet.

Via arXiv