I caught a glimpse of a potential future for 3D modeling tools, and it’s breathtaking.

Now, before you get too excited, this is entirely my speculation. It’s based on a new open source tool I’ve been fiddling with, DALL-E Mini.

DALL-E Mini is an open source machine learning project that does something incredible, and it takes only six words to describe:

“Generate images from a text prompt”

The system has examined millions and millions of online images and their associated captions. From these, it has learned to “draw” new images, and it can even combine different concepts in the same output. Think of it as you writing the caption and the system filling in the image.

The output is a selection of rough 2D images that the system believes capture the text request.

You could, for example, ask for “Queen Elizabeth”, and you’d get images like this:

Sure, these are not as precise as photographs, but remember, we’re basically asking the AI to hand-draw a picture. Could you do as well? This is perhaps the best of the Queen Elizabeth results:

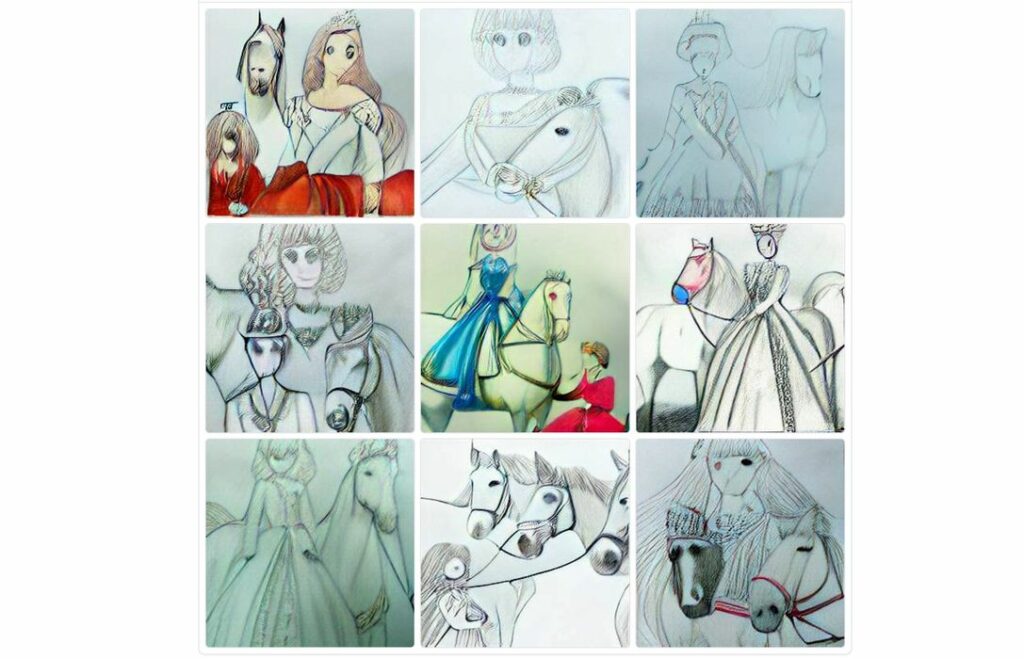

But you could make it more complex, for example asking for “anime drawing of Queen Elizabeth with horses”, and this is the result:

Not too bad!

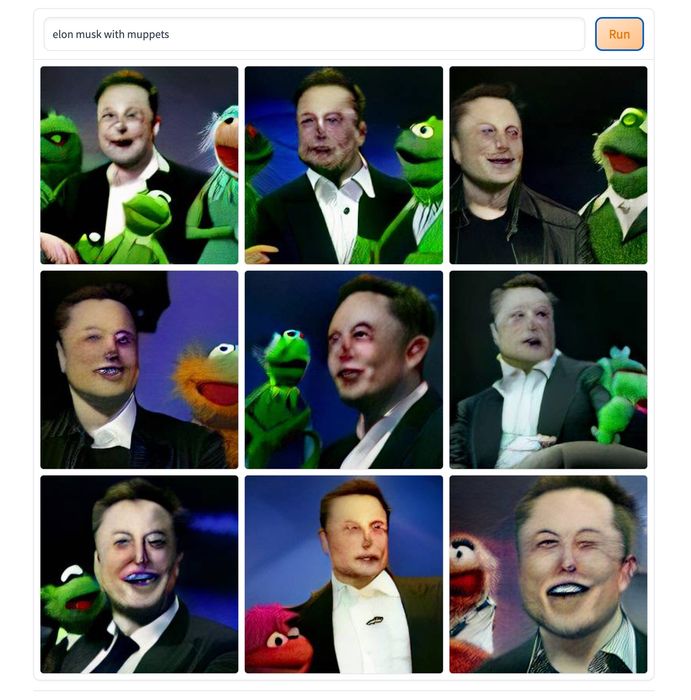

But hold on a moment. While most users of the DALL-E Mini demo ask for silly things like “Darth Vader on Seinfeld” or “Elon Musk with Muppets”, what if you asked the system for a picture of a mechanical part? Let’s give it a try by asking for a “y adapter with threads”. The result:

And here’s a re-run to get more results:

Some of those images really are y adapters. The one at the top might be the best.

Let’s try something else, “circular heat exchanger made of copper”. The results:

This is incredible, but of course these parts are not necessarily feasible or functional. Some of the y adapters, however, might be.

What if we wanted an art piece? I tried “bracelet made of skulls”. The results:

Those designs are really quite good.

But they are all only 2D images, and pretty rough ones at that.

But let’s think about this process a bit more. What if, instead of training the system using random images from the internet with captions, we trained the system using online parts catalogs for mechanical parts? What if we used the CAD models for those parts instead of the images of the parts?

What if we changed the output format so that it produced a CAD or 3D model instead of a 2D image?

What if…

What if we asked that system for a “27mm threaded y adapter at 45 degree angle”?

We just might get the exact 3D model we wanted, or at least a few versions to choose from.

The AI system would not be pulling a canned 3D model from a repository; instead, it would literally draw the part from scratch to meet the requirements. This would enable all sorts of variations that are not available today. Need a 7 mm version, but only 5 mm and 10 mm versions exist? No problem!

As I mentioned above, this is pure speculation on my part, but the concept appears reasonable. It may require massive amounts of training data, huge computing resources and a ton of tuning, but it seems possibly feasible.

Such a system might first be used for artistic 3D models, as they could have lower requirements for precision and accuracy than mechanical parts. But I think at this point you get the idea of where this concept could lead.

It could lead to a world where you simply ask for something and it is designed for you, at least for simple parts. And from simple things, more complex things often grow.

It wouldn’t surprise me if someone, somewhere, is already secretly working on this concept. If not, someone definitely should.