There’s an entirely new way to 3D scan objects, and it’s going to change everything.

Traditionally there have been only a few standard methods used by manufacturers to build 3D scanners:

Structured Light, in which a known pattern of light is projected onto an object. The observed deviations from the projected pattern reveal the geometry of the object. Software then reconstructs the 3D geometry after examining multiple views.

Depth Ranging, where either an array of infrared pixels or a sweeping laser beam measures the distance to each point in a scene. By collecting this data from multiple viewpoints, it’s possible to reconstruct the object’s geometry.

Photogrammetry, which uses a large series of standard optical images of an object taken from multiple angles. The idea is to estimate the position in 3D space of each portion of the image by observing how it shifts, relative to the background, from one image to the next. Complex software then reconstructs the object’s geometry.
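
The distance calculations behind the last two methods can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular scanner works: it assumes a depth sensor that times a light pulse (time of flight), and a simplified photogrammetry case with just two side-by-side camera positions. The function names and sample numbers are made up for the example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_seconds: float) -> float:
    """Distance to a surface from the round-trip time of a light pulse.

    Assumes a time-of-flight depth sensor.
    """
    # The pulse travels to the surface and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def parallax_depth_m(focal_length_px: float, baseline_m: float,
                     disparity_px: float) -> float:
    """Depth of a feature seen from two side-by-side camera positions.

    Assumes both views point the same way and differ only by a sideways
    shift (baseline_m). disparity_px is how far the feature moves between
    the two images, in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    # Nearby features shift a lot between views; distant ones barely move.
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # A pulse that returns after 10 nanoseconds hit something about 1.5 m away.
    print(tof_distance_m(10e-9))
    # A feature that shifts 50 px between views taken 10 cm apart.
    print(parallax_depth_m(1000.0, 0.1, 50.0))
```

Real photogrammetry software handles many images with unknown camera positions, so the second function only shows the underlying parallax idea.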

Virtually every 3D scanner you encounter will use one of these approaches to obtain a 3D scan. They’re all quite successful, although each has limitations of one kind or another. Such equipment has been widely used in industry for reverse engineering parts, capturing historical artifacts, measuring body parts for custom products, digitizing physical assets for games or AR functions, and even just for fun sculpture prints.

Recently a wave of breakthrough AI applications has emerged, with perhaps the most notable being GPT-3, a system that can create long text passages from a simple prompt, and DALL-E 2, which can generate astonishing images on demand from a simple text caption.

The success of these systems has led to an explosion of different AI-powered applications for different purposes. One of them turns out to be 3D scanning.

NeRF – Neural Radiance Field

The new technology is called “NeRF”, or “Neural Radiance Field”. Don’t bother looking it up in Wikipedia, it’s that new.

Let’s get you up to speed. There is a thing called a “radiance field” in computer graphics. It’s a mathematical description of how much light, and of what color, travels through each point in a scene in each direction. As you might imagine, an image contains not just the light from an object, but also all the reflections, blurring, shadows and other effects. A radiance field captures all of these, so if you know it, you can work out what a camera would see from any position.

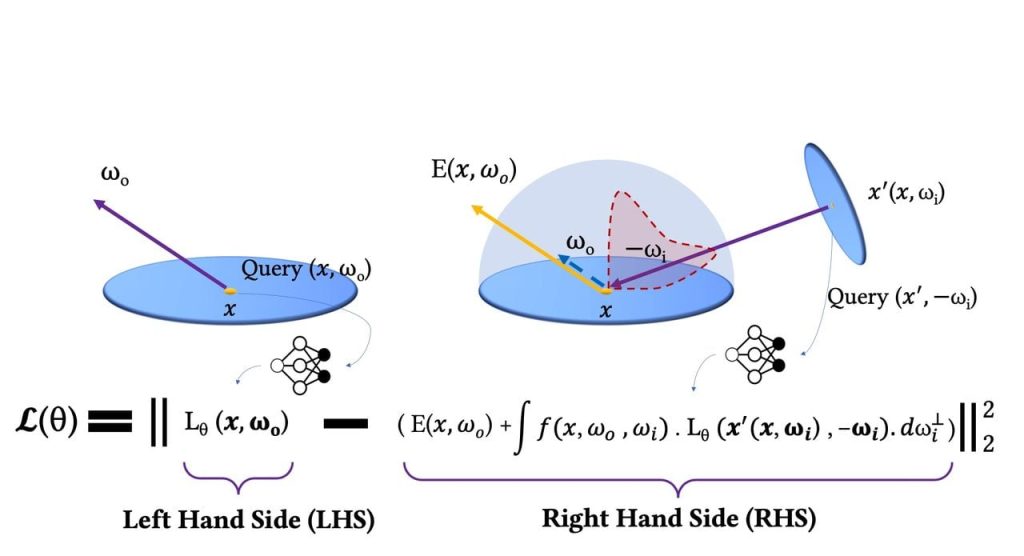

The new development is based on a 2020 paper entitled, “NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis”, which proposes using a neural network to represent a scene’s radiance field.

Basically what happens is that a neural network is trained using a series of optical images of a subject taken from a variety of angles. This part is similar to photogrammetry, but the processing is quite different.

Once the neural network is trained, it can then generate images, much like DALL-E 2, except instead of a text prompt, the NeRF accepts a camera viewpoint, such as an angle and elevation. From that it generates the “right” image for that orientation.

It’s then possible to generate views of the subject from angles where no image was actually captured.
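
To make the idea more concrete, here is a minimal Python sketch of how a view can be produced from a radiance field. In the published NeRF formulation, the network is asked for the color and density at a 3D point seen from a given direction, and each pixel is built up by accumulating those answers along a ray from the camera. The `toy_field` function below is a hypothetical stand-in for a trained network (it simply describes a reddish ball), and the sample counts and distances are arbitrary assumptions chosen for illustration.

```python
import math


def toy_field(position, direction):
    """Hypothetical stand-in for a trained NeRF network.

    Returns (rgb, density) for a 3D point viewed from a given direction.
    Here the "scene" is just a unit sphere centered at the origin.
    """
    x, y, z = position
    inside = x * x + y * y + z * z <= 1.0
    density = 5.0 if inside else 0.0
    # Let the color depend on viewing direction, as a real NeRF can.
    shade = 0.5 + 0.5 * max(0.0, -direction[2])
    return (shade, 0.2, 0.2), density


def render_ray(field, origin, direction, near=0.0, far=4.0, n_samples=64):
    """Accumulate color along one camera ray; returns (rgb, opacity)."""
    step = (far - near) / n_samples
    transmittance = 1.0  # fraction of light not yet blocked
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * step  # sample at the middle of each segment
        point = tuple(o + t * d for o, d in zip(origin, direction))
        rgb, density = field(point, direction)
        # Chance that this short segment absorbs the ray.
        alpha = 1.0 - math.exp(-density * step)
        weight = transmittance * alpha
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    return tuple(color), 1.0 - transmittance


if __name__ == "__main__":
    # One ray aimed at the ball, and one that passes beside it.
    print(render_ray(toy_field, (0.0, 0.0, 3.0), (0.0, 0.0, -1.0)))
    print(render_ray(toy_field, (0.0, 2.0, 3.0), (0.0, 0.0, -1.0)))
```

Repeating this for every pixel of a virtual camera yields a full image, which is why views can be generated from positions where no photo was ever taken.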

For 3D scanning purposes, these images can then be used in a manner similar to photogrammetry, except with more precision because the generated images are consistent and “perfect”.

So far I’ve found only one scanner application that uses the NeRF concept, Luma AI. The app is in beta, and I’ve been able to do some testing with it. So far I’ve found the NeRF method to be far superior to almost any other scanning method I’ve used, and I’ve used many.

Look for a hands-on review of the Luma AI app coming soon to these pages.

Via ArXiv