Researchers at NVIDIA have created a unique method of producing 3D models from 2D images.

If that sounds like photogrammetry, it should. Photogrammetry is the process of capturing images of an object from a series of different angles, and then processing them in software to reconstruct a 3D model. The process is commonly used these days, and multiple software options are available, including some for mobile devices.

However, it’s not without problems. There are three main issues.

- The captured images must be of exceptional quality, with visible patterns, consistent focal length, uniform lighting, and precise focus, and they must number in the hundreds

- The processing time to generate a 3D model can be lengthy

- The resulting 3D models are often low quality unless perfect capture conditions existed

As a result, photogrammetry isn’t used as much as it might be. But now researchers at NVIDIA have developed a new approach that could make things much better.

The key is in the image processing.

Traditional photogrammetry software attempts to create a point cloud by comparing the positions of recognizable background patterns as the viewing angle changes throughout the images. Based on these angular calculations, each pixel is given a position in 3D space. The result is a messy point cloud, with points sprayed across the scene, hopefully representing the 3D object.
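To make the "angular calculations" idea concrete, here is a minimal sketch of how one feature seen from two camera positions can be placed in 3D. It assumes the camera positions and the viewing directions toward the feature are already known, which real photogrammetry software has to estimate first. The function name `triangulate_midpoint` and its parameters are hypothetical and are not taken from any particular package.

```python
def triangulate_midpoint(o1, d1, o2, d2):
    """Estimate a 3D point from two viewing rays.

    Each ray is given by a camera origin (o) and a direction (d) toward
    the same feature. Returns the midpoint of the shortest segment that
    joins the two rays. Illustrative only: assumes known camera poses.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w = [p - q for p, q in zip(o1, o2)]      # vector from camera 2 to camera 1
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b                    # zero when the rays are parallel
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique closest point")
    s = (b * e - c * d) / denom              # distance parameter along ray 1
    t = (a * e - b * d) / denom              # distance parameter along ray 2
    p1 = [o + s * k for o, k in zip(o1, d1)]
    p2 = [o + t * k for o, k in zip(o2, d2)]
    return [(u + v) / 2 for u, v in zip(p1, p2)]


# Two cameras one unit apart, both looking at a feature at (0.5, 0, 2).
point = triangulate_midpoint([0, 0, 0], [0.5, 0, 2], [1, 0, 0], [-0.5, 0, 2])
```

When the two rays do not quite intersect, which is the normal case with imperfect images, the midpoint is only an estimate. That is one way points end up slightly out of place.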

Usually there are many outlying points that, for one reason or another, ended up in the wrong place, typically because of poor imaging conditions. Reflections, out-of-focus areas, and other issues can easily cause stray points. They can also slightly misposition the points that are otherwise correct.

A second step then occurs to “clean” the point cloud by simplifying it and removing obviously wrong points.
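One common way to remove "obviously wrong" points is a statistical filter: drop any point whose nearest neighbours are unusually far away. The sketch below is a simple brute-force version of that idea, not the method of any specific photogrammetry package. The function name `remove_outliers` and the parameters `k` and `std_ratio` are assumptions chosen for illustration.

```python
import math
import statistics


def remove_outliers(points, k=3, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbours
    is unusually large compared with the rest of the cloud.

    Brute force (O(n^2)), so suitable only for small illustrative clouds.
    """
    if len(points) <= k:
        return list(points)
    mean_knn = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    # Keep points within std_ratio standard deviations of the average spacing.
    threshold = statistics.mean(mean_knn) + std_ratio * statistics.pstdev(mean_knn)
    return [p for p, m in zip(points, mean_knn) if m <= threshold]


# Eight corners of a unit cube plus one stray point far from the object.
cube = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
cleaned = remove_outliers(cube + [(10, 10, 10)])
```

A filter like this only removes points that are clearly isolated. Points that are slightly mispositioned sit among their neighbours and survive the cleaning step.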

The final stage is to knit those points together by wrapping a skin of triangles around them. There are multiple algorithms for doing so, but the issue is that due to the slight mispositioning of points, the surface generated tends to be wobbly. This is especially evident if scanning a flat surface: it’s NEVER flat in the captured scan.
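To see why small position errors produce a wobbly surface, consider a single triangle that should lie flat. The following sketch, with the hypothetical helper `triangle_tilt_degrees`, measures how far a triangle's normal leans away from vertical when one of its corners is slightly too high.

```python
import math


def triangle_tilt_degrees(p0, p1, p2):
    """Angle in degrees between a triangle's normal and the z axis."""
    u = [b - a for a, b in zip(p0, p1)]
    v = [b - a for a, b in zip(p0, p2)]
    # Cross product u x v gives the (unnormalised) triangle normal.
    n = [
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    ]
    length = math.sqrt(sum(c * c for c in n))
    if length == 0:
        raise ValueError("degenerate triangle")
    cos_angle = max(-1.0, min(1.0, abs(n[2]) / length))
    return math.degrees(math.acos(cos_angle))


flat = triangle_tilt_degrees((0, 0, 0), (1, 0, 0), (0, 1, 0))
noisy = triangle_tilt_degrees((0, 0, 0), (1, 0, 0.1), (0, 1, 0))
```

In this example the flat triangle has zero tilt, while raising one corner by a tenth of the edge length tilts the facet by roughly 5.7 degrees. Neighbouring triangles tilt in different directions, so the surface looks rippled.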

The NVIDIA researchers used a different approach. Their algorithm begins with a basic 3D shape, say a sphere or torus, and then gradually distorts it, iteration by iteration. This is quite analogous to using a 3D sculpting tool like ZBrush, where a basic shape is distorted into the desired shape. However, here the process is automated and matched to the input images.

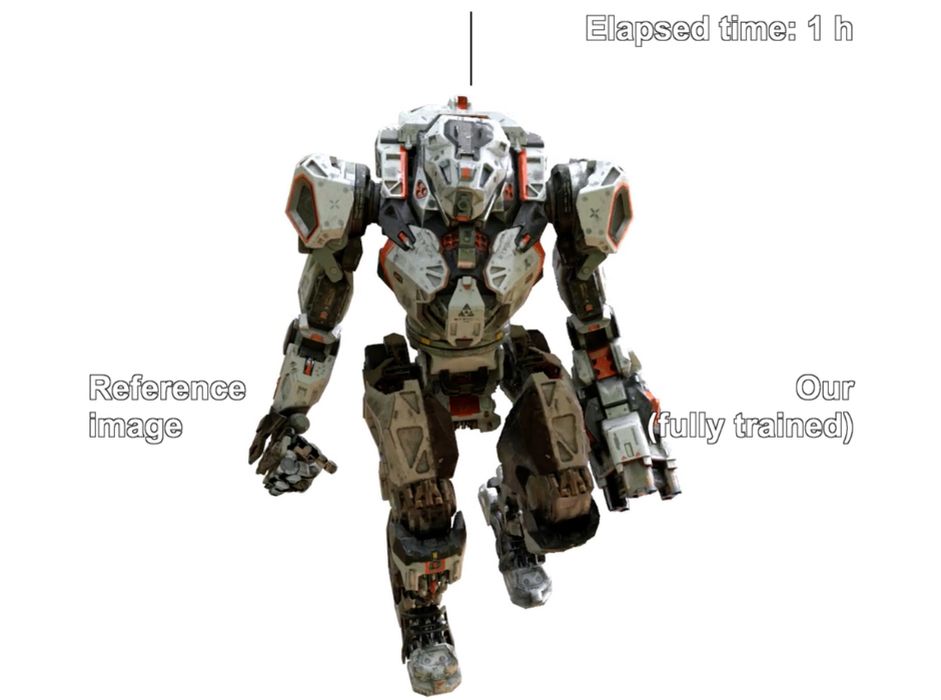
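The idea of starting from a basic shape and distorting it step by step can be illustrated with a toy 2D analogue. This is emphatically not the NVIDIA algorithm: the researchers match the shape against the input images, whereas this sketch assumes the target outline is known directly and simply nudges each vertex of a circle toward it by gradient descent. The function `deform_to_target` and all of its parameters are hypothetical.

```python
import math


def deform_to_target(target_radius, n_vertices=32, steps=100, lr=0.1):
    """Toy 2D analogue: deform a unit circle toward a target outline.

    ASSUMPTION: target_radius(angle) gives the target outline directly.
    The real method only has images to compare against.
    Returns the final vertices and the loss recorded at each step.
    """
    angles = [2 * math.pi * i / n_vertices for i in range(n_vertices)]
    radii = [1.0] * n_vertices                   # the starting "basic shape"
    targets = [target_radius(a) for a in angles]
    history = []
    for _ in range(steps):
        # Loss: sum of squared differences between current and target radii.
        history.append(sum((r - t) ** 2 for r, t in zip(radii, targets)))
        # Gradient of that loss with respect to each radius is 2 * (r - t).
        radii = [r - lr * 2 * (r - t) for r, t in zip(radii, targets)]
    vertices = [(r * math.cos(a), r * math.sin(a)) for r, a in zip(radii, angles)]
    return vertices, history


def ellipse(angle, a=2.0, b=1.0):
    """Radius of an ellipse with semi-axes a and b at the given angle."""
    return a * b / math.sqrt((b * math.cos(angle)) ** 2 + (a * math.sin(angle)) ** 2)


vertices, history = deform_to_target(ellipse)
```

Each iteration moves the shape a little closer to the target, and the recorded loss shrinks accordingly. The sketch leaves out the hard part of the research: computing that error, and how to reduce it, from photographs alone.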

The results are impressive. Compute time is significantly shorter than with conventional point cloud generation and processing, and the generated 3D model is of much higher quality. The algorithm is also able to capture textures correctly even in varying lighting conditions.

The algorithm requires, as you might suspect, processing capacity on NVIDIA GPUs, and fast ones at that. Nevertheless, this is an approach that could eventually change the way we capture 3D scans for complex organic objects.

As of now, this is just research, but the results are so impressive that it’s hard to imagine NVIDIA or others not taking this forward into commercial products.

Via NVIDIA, ARXIV (PDF) and GitHub