This could be the biggest breakthrough in 3D printing in decades: automatic 3D models.

Luma AI has this week released a closed beta test of a new service, “Imagine 3D”, that can automatically generate 3D models from a user-specified text prompt.

Wait, what?

It goes like this: you type in a sentence describing what you want. An example could be: “Elephant with raised trunk and two tusks standing on a log”. After a short time, the system returns a full-color 3D model of exactly that.

It’s pure magic.

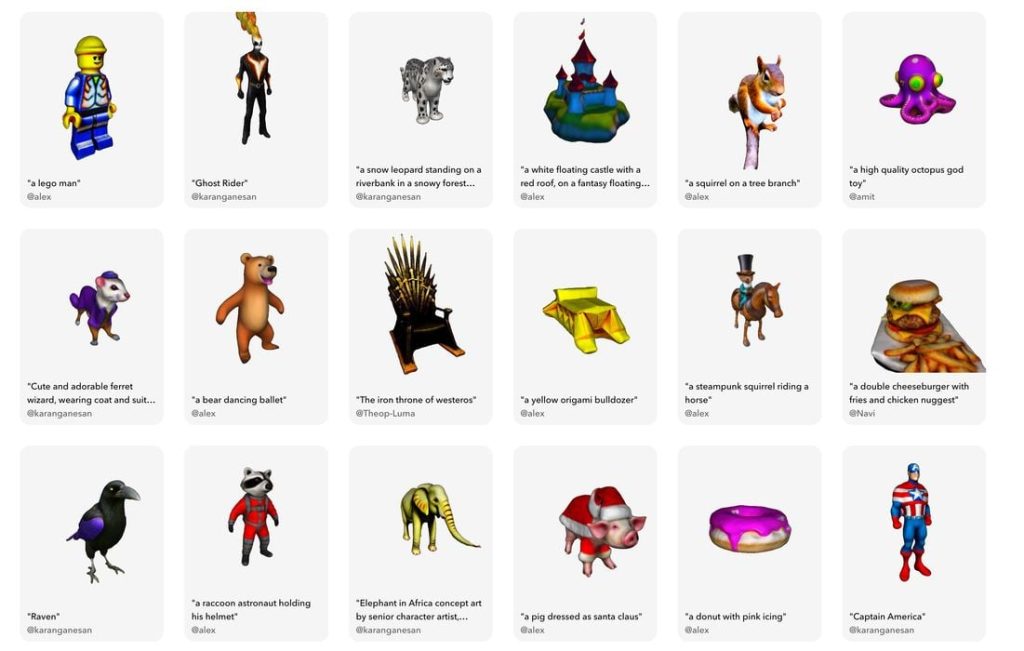

The few fortunate Luma AI beta testers who have access are now generating dozens of 3D models that are accumulating in the “capture” section of Luma AI’s website. They are simply random items. Here are a few examples:

This one is generated from “A screaming crow”. Just that: “a screaming crow” and no other input was provided. Incredible.

Note that this is an actual 3D model; you can spin it around and see the crow in all dimensions, just as if someone had modeled it in a CAD program.

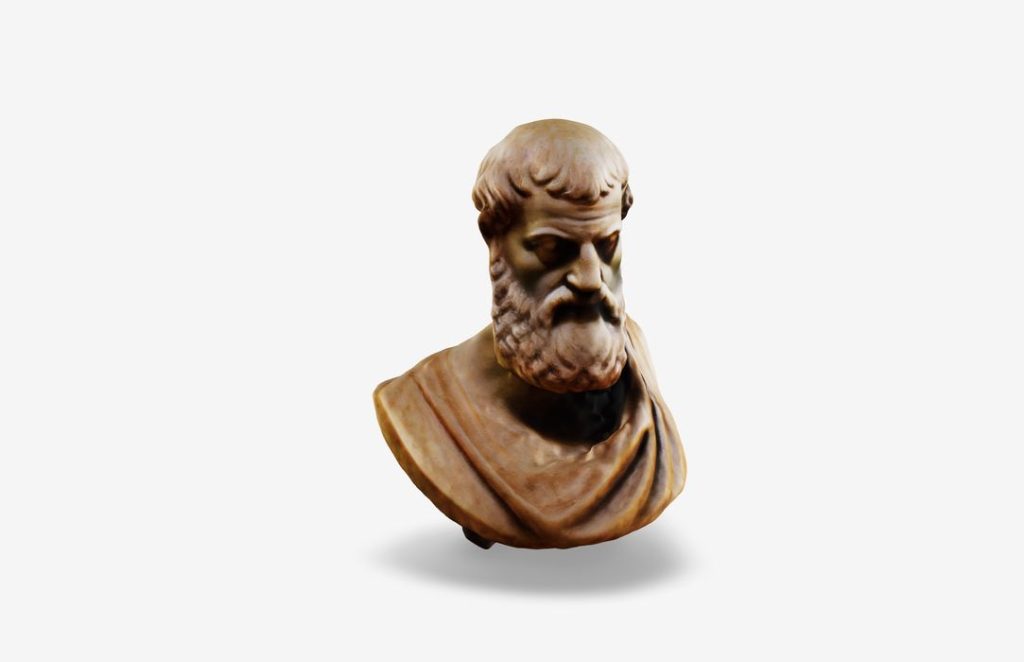

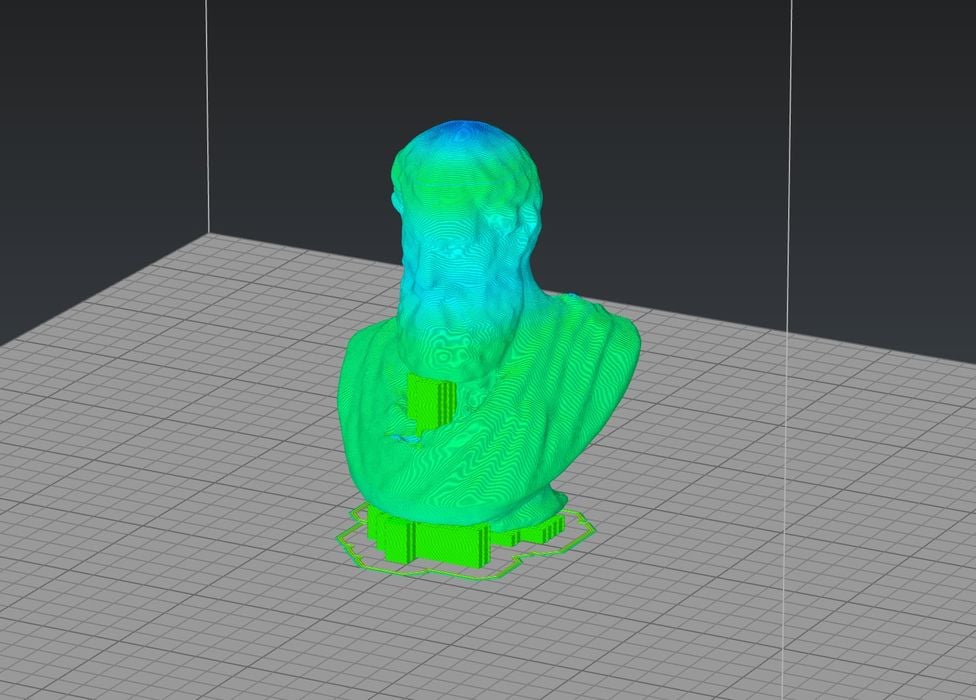

This one is generated from “A highly detailed bust of Aristotle”. Note that it looks a bit better than it should due to the color texture.

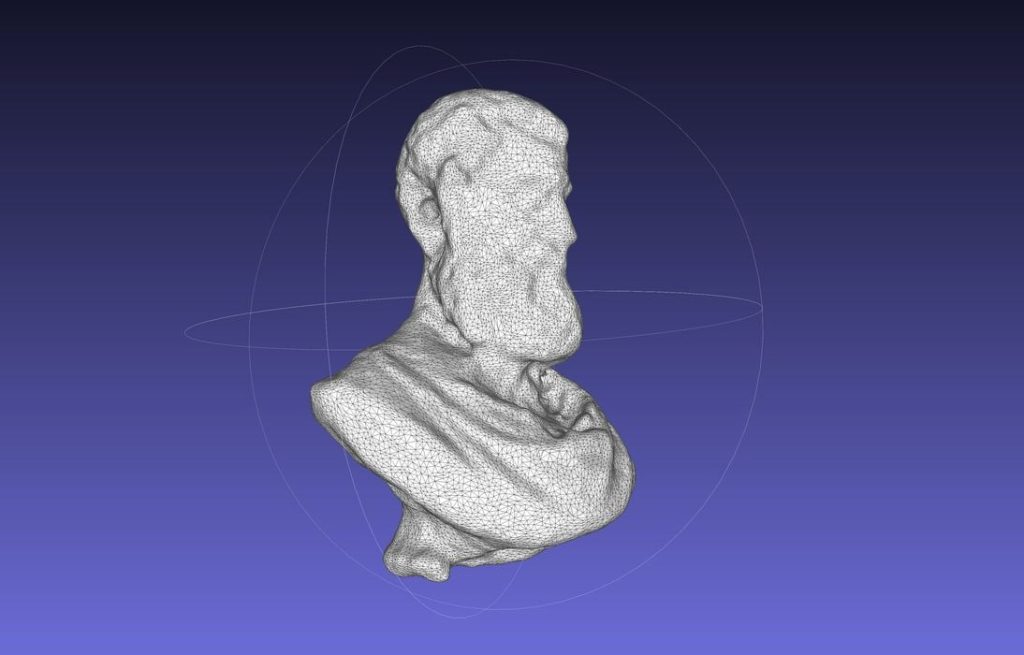

All of these models are exportable in both GLTF and OBJ formats. I chose to download “Aristotle” and take a closer look.

After removing the texture, you can see that it really isn’t the greatest 3D model. You couldn’t say that it is “highly detailed” as per the prompt.

But it is a 3D model. From a text prompt. Which is utterly amazing.
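For anyone who wants to repeat the “remove the texture” step on an OBJ export, here is a minimal sketch in plain Python. It assumes the color lives in texture coordinates (`vt` lines) and material references (`mtllib`, `usemtl`), which is how the OBJ format usually carries texture; I have not confirmed the exact layout of Luma AI’s exports, and the function name `strip_obj_texture` is purely illustrative.

```python
def strip_obj_texture(obj_text: str) -> str:
    """Return OBJ text with texture coordinates and material references removed.

    Assumption: the model's color comes from `vt`/`mtllib`/`usemtl` records.
    Geometry (`v`), normals (`vn`) and faces (`f`) are kept.
    """
    kept = []
    for line in obj_text.splitlines():
        parts = line.split()
        if not parts:
            continue  # drop blank lines
        tag = parts[0]
        if tag in ("vt", "mtllib", "usemtl"):
            continue  # texture coordinates and material bindings
        if tag == "f":
            # Face entries look like v, v/vt, v//vn or v/vt/vn.
            # Keep the vertex index and the normal index, drop the vt index.
            refs = []
            for ref in parts[1:]:
                fields = ref.split("/")
                vertex = fields[0]
                normal = fields[2] if len(fields) > 2 else ""
                refs.append(f"{vertex}//{normal}" if normal else vertex)
            kept.append("f " + " ".join(refs))
        else:
            kept.append(line)
    return "\n".join(kept) + "\n"


# A tiny hand-written triangle, standing in for a real export.
sample = (
    "mtllib bust.mtl\n"
    "v 0 0 0\nv 1 0 0\nv 0 1 0\n"
    "vt 0 0\nvt 1 0\nvt 0 1\n"
    "vn 0 0 1\n"
    "usemtl skin\n"
    "f 1/1/1 2/2/1 3/3/1\n"
)
stripped = strip_obj_texture(sample)
```

Loading the stripped file in a mesh viewer or slicer shows the bare geometry, which is what a 3D printer actually sees.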

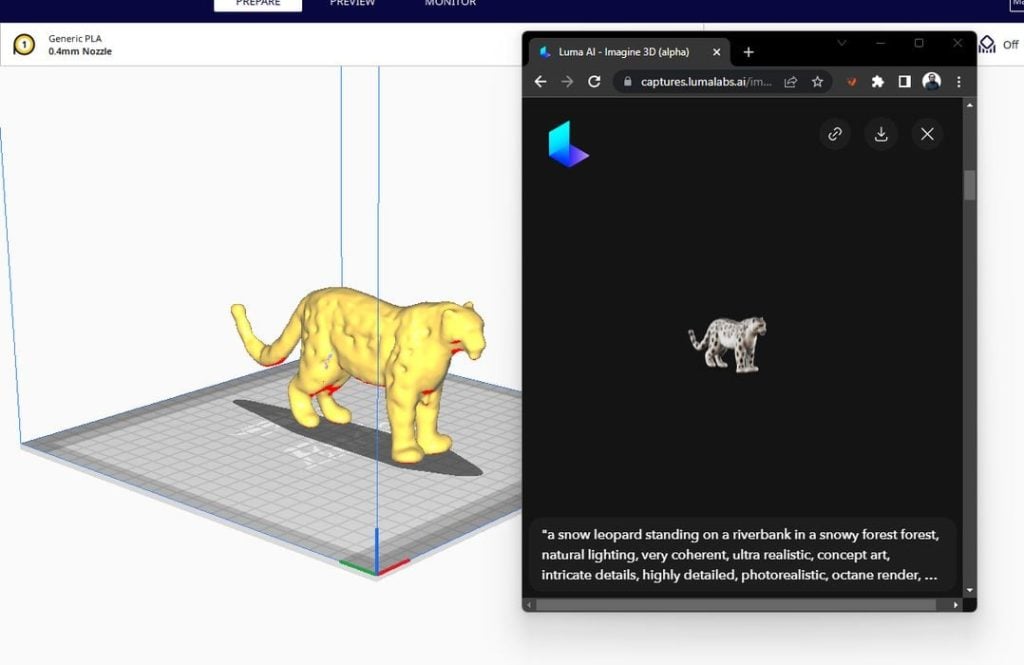

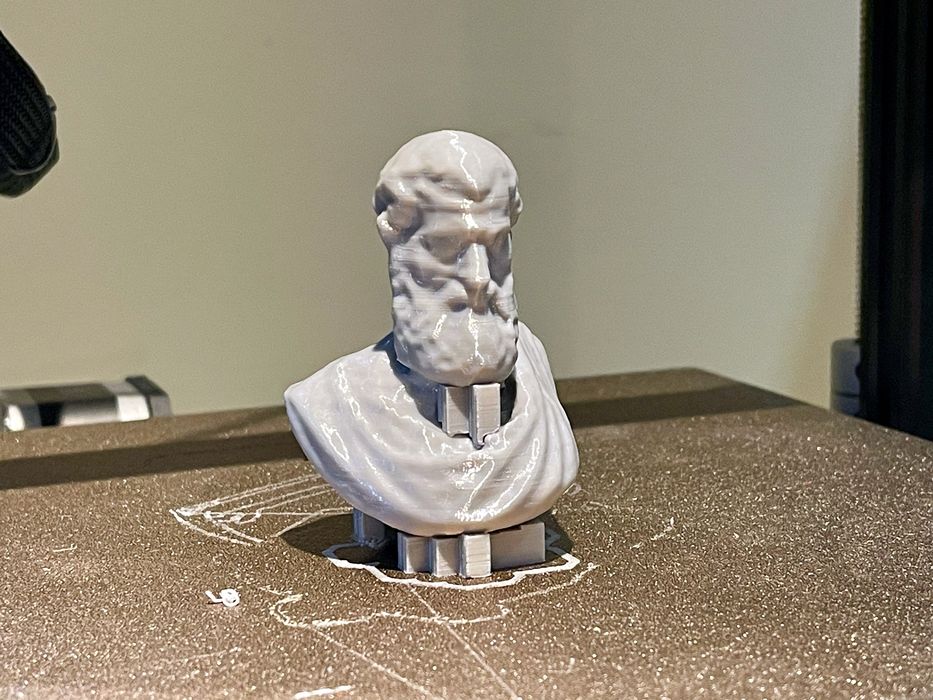

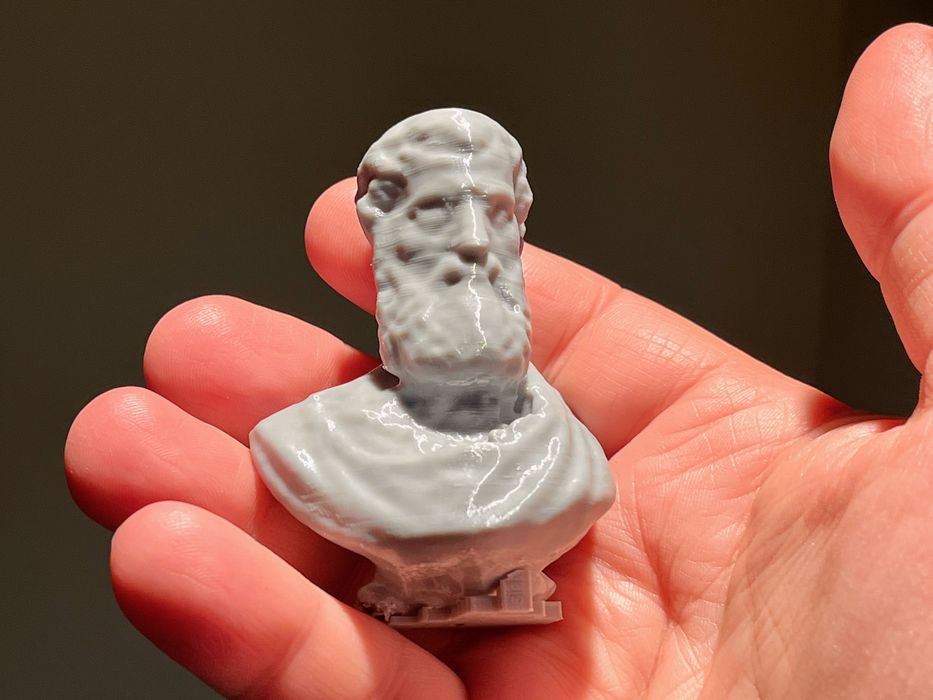

These can indeed be 3D printed, as one participant in the beta test has been doing above.

I had a suspicion this capability would soon be developed after seeing text-to-image services a few months ago. At the time, I thought it was something “Beyond Generative Design”.

In today’s 3D modeling, “generative” refers to the process of specifying mechanical and other constraints and letting an AI generate (or “figure out”) an optimal 3D solution. What Luma AI has done here is not that at all. There are no constraints; it’s just like creating an image from a prompt, except it’s a 3D model.

It’s just magic. Oh, I said that already, but it needs to be said again.

I 3D printed Aristotle to see how it came out, and also to demonstrate the end-to-end process of imagining an item and producing it in real life, and it definitely works.

How does Luma AI make this work? It hasn’t yet been described by the company, but it’s likely somewhat related to their existing 3D scanning technology, which I’ve been testing extensively.

Their beta 3D scanner is easily the best I’ve used, as it is able to overcome common problems of lighting, reflectivity and other challenging conditions. Their app is also extremely easy to use as it guides you through a specific scanning process in a way no other app does.

Their scanning app takes a number of still images and creates an AI model using “NeRF” technology (Neural Radiance Field). This model can then recreate a 2D view of a subject from any given angle, even those where no image was taken. Thus you can “spin” a 3D model to see all sides.
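To give a feel for how a NeRF turns a learned scene into a 2D view, here is a rough sketch of the standard volume-rendering step from the published NeRF technique: the color of one pixel is built by walking along a camera ray and blending the color and density the network reports at each sample. This is the general method, not Luma AI’s code, and the name `composite_ray` and the sample values are made up for illustration.

```python
import math


def composite_ray(densities, colors, deltas):
    """Blend samples along one camera ray into a single pixel color.

    densities: density (sigma) at each sample, front to back
    colors:    (r, g, b) at each sample
    deltas:    distance between consecutive samples
    Returns the pixel color and the light that passed through every sample.
    """
    transmittance = 1.0  # fraction of light not yet blocked
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # its contribution to the pixel
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return pixel, transmittance


# Empty space (density 0) in front of a solid red surface (huge density):
# the green sample contributes nothing and the pixel comes out red.
pixel, leftover = composite_ray(
    densities=[0.0, 1e9],
    colors=[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[1.0, 1.0],
)
```

Repeating this for every pixel of a virtual camera gives the “view from any angle” described above; the density values are also what hint at where the object’s surface is.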

But from there it would be a simple matter to transform those views into an accurate 3D model. It may be that Imagine 3D injects multiple 2D views of a generated subject image into that same scanning process to create the 3D model.

Regardless of how it’s done, it definitely works.

I’m on the waitlist to try out Imagine 3D, and when I get into it, I will provide more details.

In the meantime, it may be time to think about the staggering implications of this technology, but that’s something for another post.

Via Luma AI